TIL: Top AI News of Week 16

💻

AI as Normal Technology

The essay “AI as Normal Technology” by Arvind Narayanan and Sayash Kapoor presents a perspective that contrasts with both utopian and dystopian narratives surrounding artificial intelligence (AI). Instead of viewing AI as an autonomous, potentially superintelligent entity, the authors argue that AI should be considered a “normal technology”: a tool that, while transformative, remains under human control and integrates gradually into society.

To view AI as normal is not to understate its impact—even transformative, general-purpose technologies such as electricity and the internet are “normal” in our conception. But it is in contrast to both utopian and dystopian visions of the future of AI which have a common tendency to treat it akin to a separate species, a highly autonomous, potentially superintelligent entity.

The statement “AI is normal technology” is three things: a description of current AI, a prediction about the foreseeable future of AI, and a prescription about how we should treat it.

…

A note to readers. This essay has the unusual goal of stating a worldview rather than defending a proposition. The literature on AI superintelligence is copious. We have not tried to give a point-by-point response to potential counter arguments, as that would make the paper several times longer. This paper is merely the initial articulation of our views; we plan to elaborate on them in various follow ups.

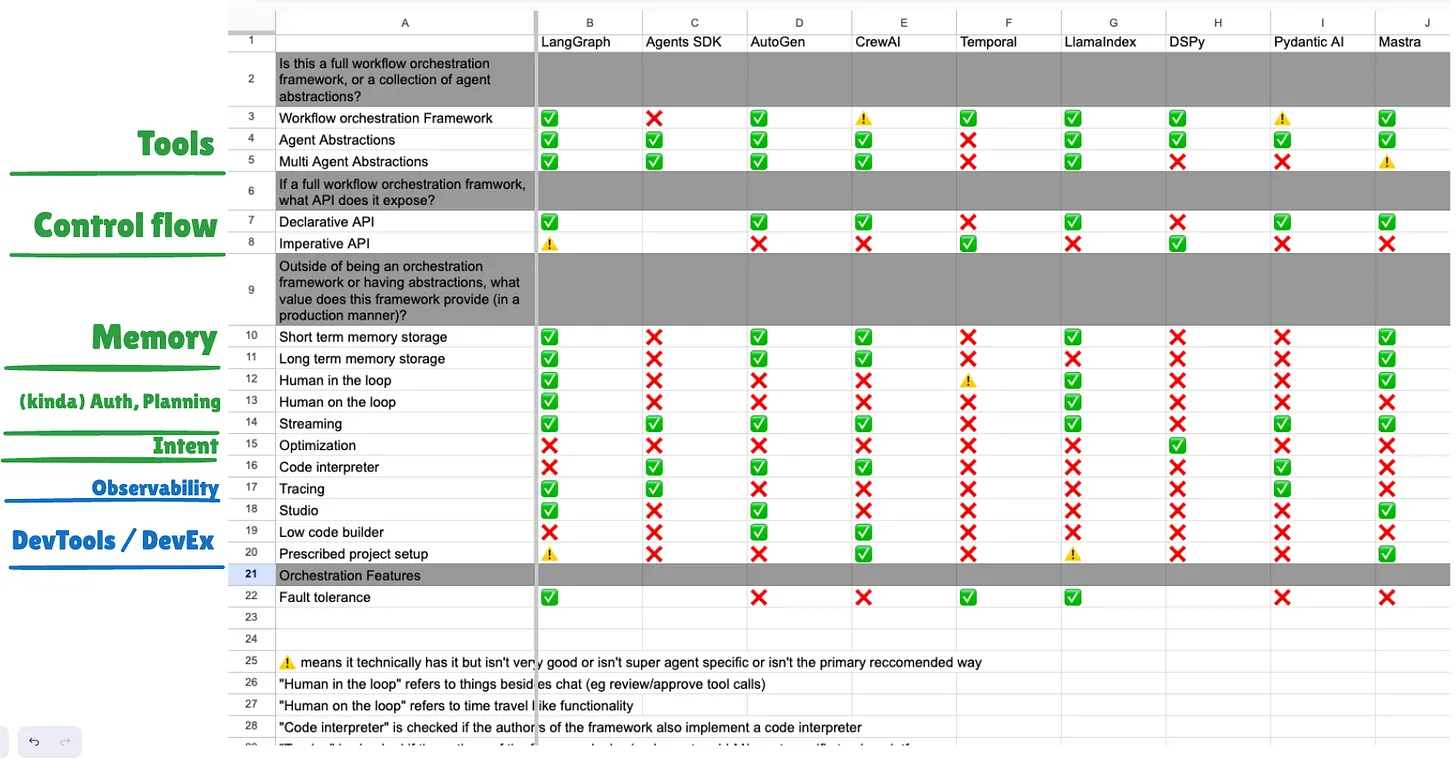

In the Matter of OpenAI vs LangGraph

The article discusses the ongoing debate in the AI community between two approaches: letting large language models (LLMs) drive the control flow directly versus embedding them in more structured, code-defined workflows. The debate is highlighted by the recent release of OpenAI’s “Practical Guide to Building Agents,” which has drawn mixed reviews, particularly in comparison with Anthropic’s equivalent guide.

At the heart of the battle is a core tension we’ve discussed several times on the pod - team “Big Model take the wheel” vs team “nooooo we need to write code” (what used to be called chains, now it seems the term “workflows” has won).

…

You should read Harrison’s full rebuttal for the argument, but minus the LangGraph specific parts, the argument that stood out best to me was that you can replace every LLM call in a workflow with an agent and still have an agentic system.
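To make the workflow-versus-agent distinction concrete, here is a minimal sketch in Python. It is not taken from either guide or from LangGraph: `fake_llm`, `workflow`, `agent`, and `TOOLS` are hypothetical names, and the "model" is a deterministic stub so the example runs offline. In the workflow, ordinary code fixes the order of steps; in the agent, the model is asked on each turn which step to take next.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a model call; a real system would call an LLM API here.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Deterministic stub that mimics three kinds of model responses."""
    if prompt.startswith("summarize: "):
        return "summary(" + prompt[len("summarize: "):] + ")"
    if prompt.startswith("translate: "):
        return "translation(" + prompt[len("translate: "):] + ")"
    if prompt.startswith("decide: done="):
        # The stub "decides" the next action from what has already been done.
        done = prompt[len("decide: done="):].split(",")
        if "summarize" not in done:
            return "summarize"
        if "translate" not in done:
            return "translate"
        return "finish"
    return ""


def workflow(text: str, llm: LLM) -> str:
    """Workflow: the code, not the model, fixes the order of the steps."""
    summary = llm("summarize: " + text)
    return llm("translate: " + summary)


# Tools the agent may choose from (assumed for illustration).
TOOLS: Dict[str, Callable[[str], str]] = {
    "summarize": lambda s: fake_llm("summarize: " + s),
    "translate": lambda s: fake_llm("translate: " + s),
}


def agent(text: str, llm: LLM, tools: Dict[str, Callable[[str], str]],
          max_steps: int = 5) -> str:
    """Agent: the model picks the next action in a loop until it says 'finish'."""
    state = text
    history: List[str] = []
    for _ in range(max_steps):
        choice = llm("decide: done=" + ",".join(history))
        if choice == "finish" or choice not in tools:
            break
        state = tools[choice](state)
        history.append(choice)
    return state


if __name__ == "__main__":
    print(workflow("hello", fake_llm))
    print(agent("hello", fake_llm, TOOLS))
```

With this stub both paths happen to produce the same result; the difference is only in who controls the sequence. Because `agent` has the same text-in, text-out shape as a single model call, any step inside `workflow` could be swapped for an `agent` call, which is one way to read the point in the quote above.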

- LangChain - How to think about agent frameworks

- OpenAI - A practical guide to building agents

Introducing OpenAI o3 and o4-mini

On April 16, 2025, OpenAI introduced two new AI models—o3 and o4-mini—marking significant advancements in reasoning capabilities and tool integration within ChatGPT.

For the first time, our reasoning models can agentically use and combine every tool within ChatGPT

…

The combined power of state-of-the-art reasoning with full tool access translates into significantly stronger performance across academic benchmarks and real-world tasks, setting a new standard in both intelligence and usefulness.

OpenAI o3 and o4-Mini Are More Impressive Than I Expected

The article concludes that OpenAI’s o3 and o4-mini significantly advance AI capabilities, challenging Google’s dominance. These models integrate perception, action, and reasoning into a cohesive system, marking a new dimension in AI. Though not perfect, their capabilities and cost-effectiveness position them as strong competitors. Further testing is needed to fully grasp their potential and limitations.

o3 and o4-mini are the first AI systems to approach full interactivity across three layers: modalities (perception), tools (action), and disciplines (cognition). Senses, limbs, cortex.

In a way, this release marks the end of “AI model” as a useful category. We kept calling them models out of habit. But these should have been called systems all along.

OpenAI Codex CLI

The Codex CLI is open-sourced. Don't confuse yourself with the old Codex language model built by OpenAI many moons ago (this is understandably top of mind for you!). Within this context, Codex refers to the open-source agentic coding interface. [...]

I like that the prompt describes OpenAI’s previous Codex language model as being from “many moons ago”. Prompt engineering is so weird.