AI Startup Survival Rules: Melvin Chen's Strategic Blueprint and Lovart's Practice

Introduction

3 hours and 44 minutes of conversation, one core question: How can AI startups survive in a game dominated by tech giants?

Lovart founder Melvin Chen’s answer is surprisingly clear: it’s not about capital or data, but about cognition, speed, and building your kingdom in places the giants “don’t care about.”

In this in-depth conversation with Luo Yonghao, Chen systematically laid out survival rules for startups in the AI era. These insights draw not only on his 10 years of hands-on experience at major internet companies, but also on Lovart’s work in the AI design tools space.

Why is this article worth reading?

If you’re starting or planning to start a company in AI, or building AI products, this article will help you clarify:

- The three strategic pillars of AI entrepreneurship

- The true philosophy of “AI Native” products

- The three internal drivers founders must have

- Predictions about the future of the design industry

Podcast Information:

- Title: Lovart Founder Melvin Chen × Luo Yonghao! Let Me Shake the World, Then Walk Away | Melvin Chen: Let Me Shake the World, Then Walk Away

- YouTube: www.youtube.com/watch

- BiliBili: www.bilibili.com/video/BV1…

Executive Summary

💡 Core Insight: AI entrepreneurship isn’t about competing with giants on capital and data—it’s about building your kingdom with cognition, speed, and conviction in places they “overlook.”

This brief analyzes the core strategic thinking of Melvin Chen, founder of the AI design tool Lovart, on how early-stage AI startups can survive and grow over the long term. His framework avoids head-on wars of attrition over capital and data with tech giants, and instead carves out a distinct space to survive through precise positioning and capability building.

Core strategic elements include:

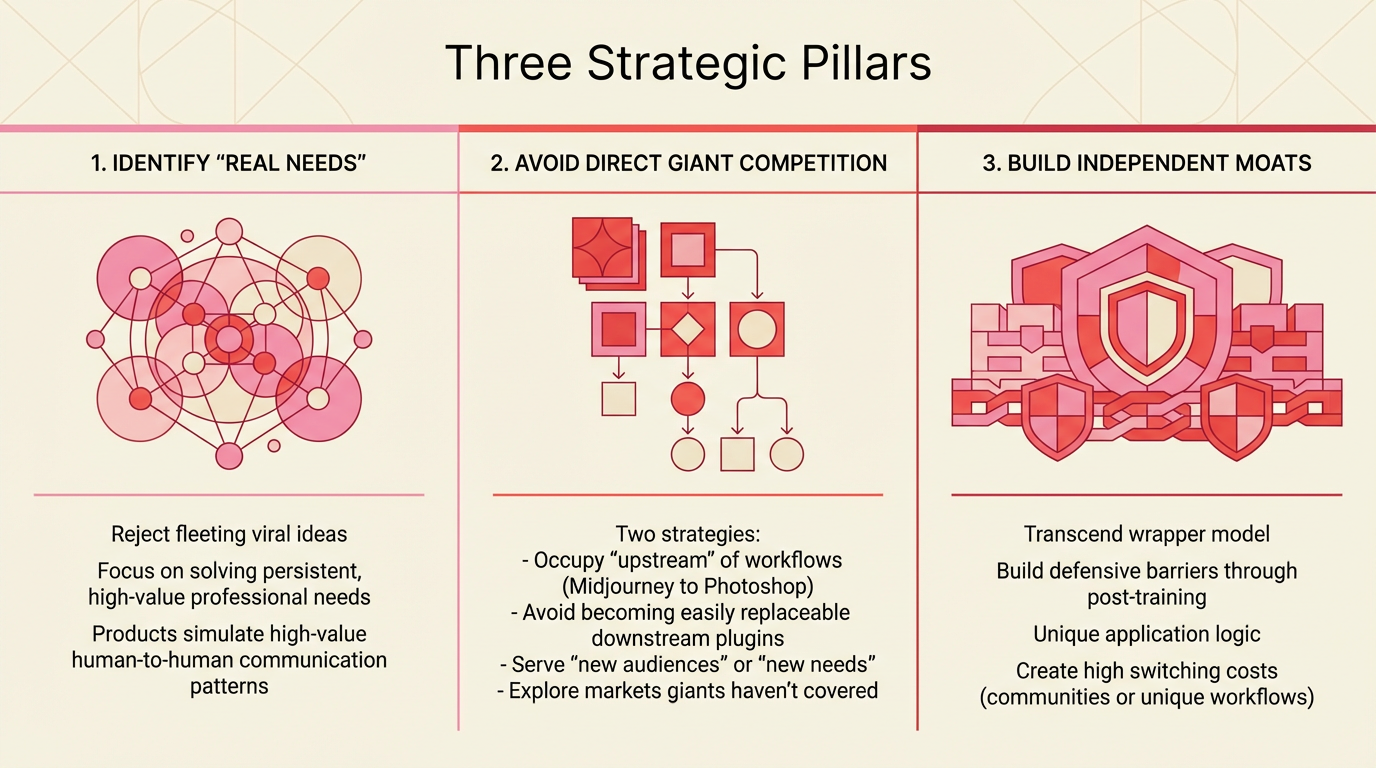

🎯 Three Strategic Pillars

- Identify “Real Needs”: Reject fleeting viral ideas and focus on solving persistent, high-value “professional needs.” Products should simulate high-value human-to-human communication patterns.

- Avoid Direct Competition with Giants: Adopt two strategies. First, occupy the “upstream” of workflows (as Midjourney sits upstream of Photoshop) rather than becoming an easily replaced downstream plugin. Second, serve “new audiences” or “new needs” by exploring markets the giants haven’t covered yet.

- Build Independent Moats: Go beyond the thin “wrapper” model that depends entirely on base models, and build defensive barriers through post-training, unique application logic, and high switching costs (such as communities or unique workflows).

🌱 “AI Native” Product Philosophy

True AI Native products have core experiences that “simply cannot exist” without AI; they are not incremental improvements to existing software (such as raising it from 60 to 80 points). The design philosophy is to create brand-new, AI-driven workflows and interaction modes.

💪 Founder’s Internal Drive

Founders must possess three internal qualities:

- Cognition: Insight into market opportunities earlier than giants

- Speed: Fastest iteration in the AI era

- Belief: 100% commitment to directions giants see as only 10% likely to succeed

🔮 Industry Future Predictions

Chen predicts AI will replace over 80% of designers, particularly those who have skills but lack unique taste and creativity, while the value of top designers will rise rather than fall. This judgment assumes that fully general artificial intelligence (Full AGI) won’t be achieved in the next 5–10 years.

Chapter 1: Entrepreneurial Survival Architecture in the AI Era

Chen outlined a blueprint for AI startups aiming for long-term development, built on two core principles: avoid being crushed by the giants, and build a sustainable business model. The blueprint contains three interconnected strategic points.

1.1 Identify “Real Needs”: Solve Professional and Persistent Problems

To build an enduring company rather than a short-term project to be sold off, a product must solve a persistent “real need” instead of chasing viral gimmicks.

What are real needs?

- ❌ Fleeting trends: AI-generated childhood photo albums, AI love letters—fun but unsustainable

- ✅ Persistent needs: Let clients communicate directly with “AI designers”—solves real business pain points

Three Principles:

- Professional needs first: Strategic focus should be on “professional needs,” even if the target users aren’t professionals in the traditional sense. In other words, the product solves a problem to professional standards and delivers professional-grade value.

- Reject fleeting trends: Reject the “clever” ideas that trend for only a few months; they offer no long-term value and cannot form a sustainable business foundation.

- Simulate human communication: A successful AI product should replicate high-value human communication patterns. For example, Lovart’s design philosophy simulates the way clients and designers collaborate and communicate in a shared visual space (like a whiteboard).

1.2 Avoid Giants: Find New Territory Upstream

Chen believes startups cannot survive head-on “vicious competition” with tech giants, because the giants hold absolute advantages in capital and data. Startups must therefore adopt differentiation strategies.

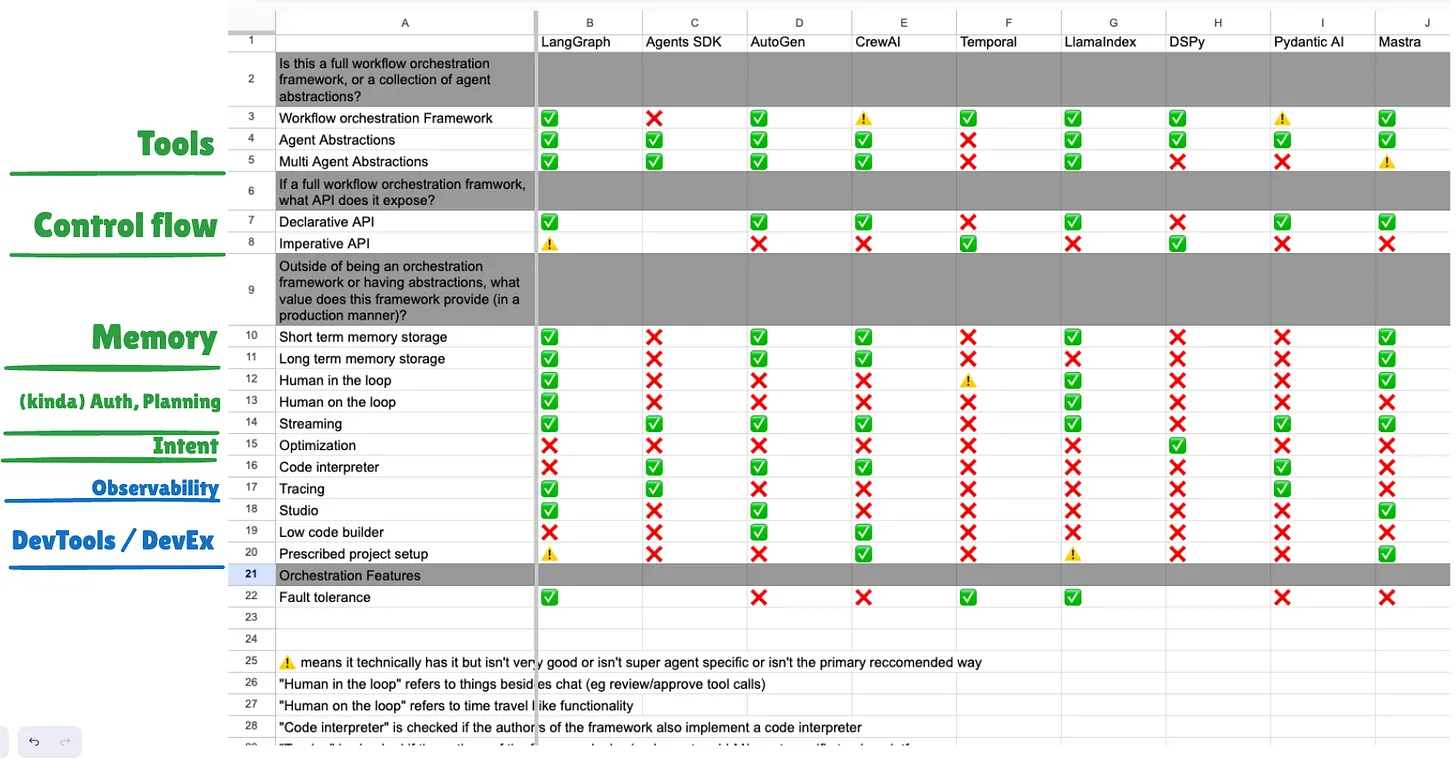

| Strategy | Description | Case Analysis |
|---|---|---|
| Occupy the workflow “upstream” | Position the product at the starting stage of the workflow, not downstream. Downstream tools (such as single-feature plugins) are easily absorbed or replaced by platform owners. | Midjourney’s success lies in sitting upstream of Photoshop: users first generate core ideas in Midjourney, then move to Photoshop for refinement. This upstream position preserves its independent value even when surrounded by giants. |
| Serve “new audiences” & “new needs” | Explore new user groups or unmet needs that giants currently ignore. Rather than improving old experiences from “60 to 80 points,” create an AI-native experience “from 0 to 1.” | Canva’s success didn’t come from taking Adobe’s professional designer users, but from serving a “new audience” with social media posting needs but no professional design skills. Similarly, Lovart aims to serve “clients” (such as marketing managers), letting them communicate directly with “AI designers” to meet their professional design needs. |

1.3 Build Moats: Independent Capabilities Beyond “Wrappers”

To avoid “being destroyed by model evolution,” startups must go beyond being mere “wrapper” applications on top of base models and build unique, hard-to-replicate defensive barriers.

- Transcend base models: Startups may build on base models such as GPT or Midjourney, but they must perform post-training on top and build higher-level application logic to deliver a unique user experience.

- Create high switching costs: Long-term value lies in building an ecosystem users find hard to leave. This can be achieved by building communities or creating unique workflows (like Lovart’s infinite canvas). Even if the giants later add similar AI features, high migration costs keep users in place.

- Tactical advantage of “nobody cares”: Startups should focus on businesses that big companies “don’t care about” or “don’t quite believe in.” When an opportunity looks only 10% likely to succeed to a big company, a startup can commit to it with 100% belief and speed. By the time the giant (the “Final Boss”) realizes the idea is viable, the startup has already leveled up and built solid defensive fortifications.

Chapter 2: Defining “AI Native”: Product Philosophy of a New Species

Chen gives a clear definition of “AI Native” products: their core experience simply cannot exist without AI technology. They are not incremental improvements made by bolting AI features onto existing tools.

2.1 Fundamental Existence vs. Incremental Improvement

Grafting AI features onto “old world” software (like adding AI text processing to Notion or Feishu) doesn’t make it AI Native. The core experiences of these products already existed; AI merely improves them from “60 to 80 points.” True AI Native products depend on AI capabilities for their very existence.

2.2 Workflow Positioning: Upstream Strategic Advantage

A key part of AI Native strategy is occupying the “upstream” of workflows, not “downstream.”

- Upstream exemplars: Tools like Midjourney and Liblib succeed because they’re at the starting point of creative workflows.

- Downstream risks: If your product is positioned as a plugin or downstream editor for giant platforms like Photoshop, you’re highly likely to get absorbed or crushed by platform owners.

2.3 Serving New Audiences and New Needs

AI Native products should focus on needs that went unmet because of past technical limitations, or on entirely new user groups. Lovart’s goal is to serve “clients,” letting them communicate directly with “AI designers”; this is a typical new need catalyzed by AI.

2.4 Interaction-Native: Restoring Human-to-Human Communication

A true AI Native product should fully leverage the characteristics of the hardware it runs on and simulate natural interaction modes, rather than interpreting “native” dogmatically.

- Infinite Canvas: Lovart uses an infinite canvas because it simulates the natural human behavior of brainstorming and aligning visually on a whiteboard; Chen treats this as AI Native interaction design.

- Touch Edit Feature: This feature lets users point at an object on screen (like a cup) with a finger, then tell the AI in natural language how to modify it. It replicates the scene of a client giving a designer revision feedback, and it relies on advanced AI image-understanding capability.

2.5 “Super Hybrid”: Recombinant Innovation

Chen describes AI Native products as a “super hybrid” that combines:

- Old world elements: Canvas, layers, editing tools

- New world AI: Generative models, understanding capabilities, interaction modes

- New species: Faster, better, lower learning cost

The key is recombination: not creating entirely new components, but combining existing elements in novel ways to create unprecedented experiences.

Chapter 3: Founder’s Internal Drive: Cognition, Speed, and Belief

Chen emphasizes that in the AI era, a startup’s core moat isn’t just technology—it’s the founder’s three internal qualities.

3.1 Cognition: Spot Opportunities Early

Founders must be able to spot market changes and potential opportunities earlier than big companies. This forward-looking cognition is the prerequisite for seizing the window of opportunity.

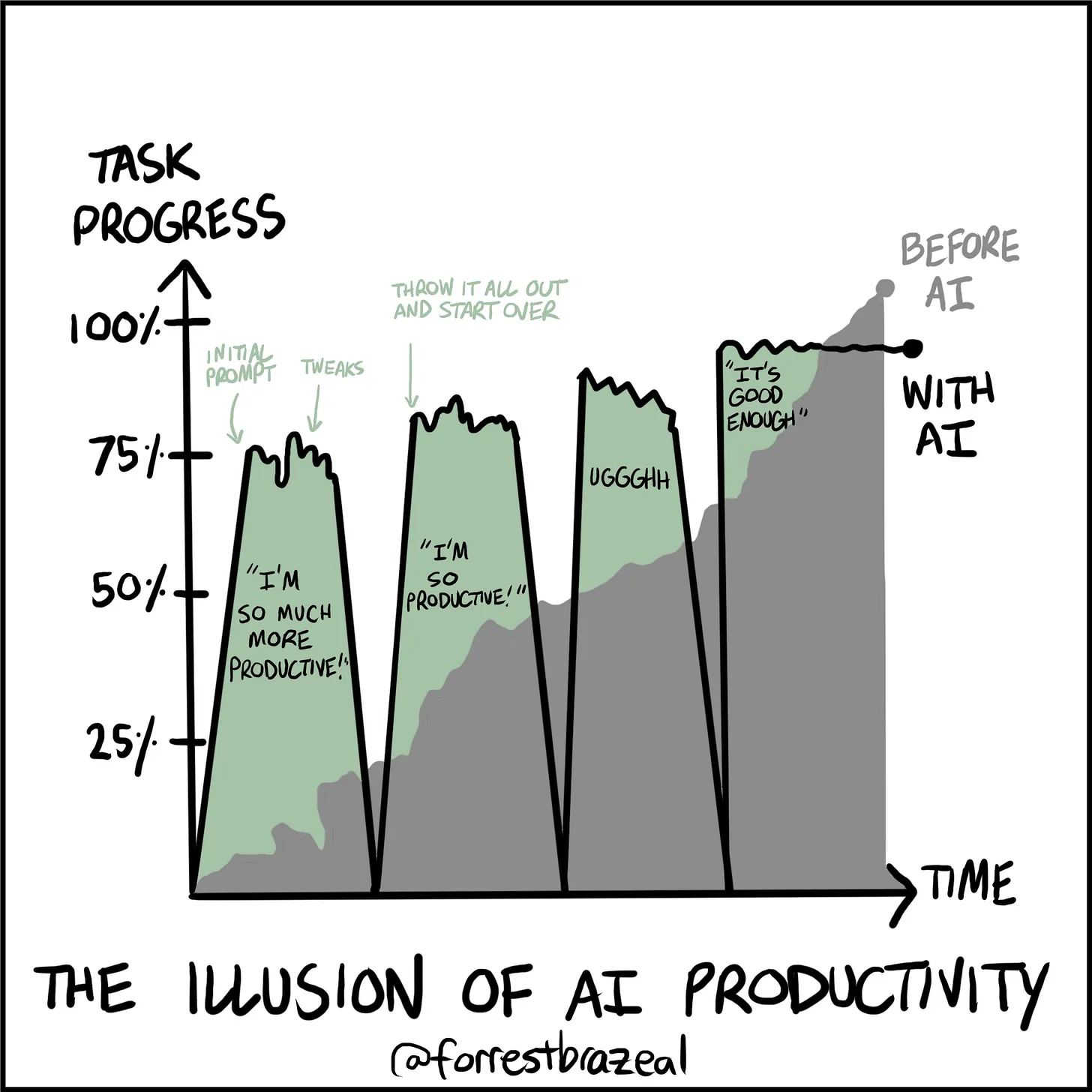

3.2 Speed: The Only Requirement in the AI Era

As base models erode past engineering barriers, value creation shifts to rapid iteration on user interaction and vertical needs. In the AI era, “speed is all you need.”

3.3 Belief: Bet on Low-Probability Futures

Founders must dare to invest 100% belief in directions that look “low probability” (say, a 10% success rate). Big companies, deciding rationally, tend to choose projects with higher success rates (say, 80%), which leaves exactly the space for disruptive innovation that startups need. Before a direction becomes consensus, the founder’s firm belief is the key force driving the team forward.

Chapter 4: Outlook on Design Industry and Future

Based on his understanding of AI development, Chen makes clear predictions about the design industry and even about the relationship between humans and AI.

4.1 Design Industry Disruption: 80% of Practitioners Will Be Replaced

He predicts AI will replace over 80% of designers, especially those “with only skills, no ideas, no taste.” The homogenized, execution-focused tasks these designers perform will be completed efficiently by AI.

At the same time, top designers who can provide “soul,” unique aesthetics, and creative direction to AI will become more valuable and more expensive.

4.2 Judgment on AGI: Core Value of Human Taste

Chen’s strategic judgment is based on a core assumption: Fully General Artificial Intelligence (Full AGI) will not be achieved in the next 5-10 years.

He believes that as long as AI remains at the stage of “infinitely approaching humans” but not yet comprehensively surpassing humans in emotion and creativity, human taste and intervention will always be the final deciding factor distinguishing mediocre from excellent results—this is also the core of human value.

Core Concept Analogies

| Analogy | Description |
|---|---|
| Planting a special fruit tree in a forest of giants | AI entrepreneurship is like trying to survive in a forest of giants. The fruit must be something people need every day (real needs); the tree can’t be planted in the shadow of the giant trees (avoid giants) but must find new soil (new audiences). Finally, it must put down deep, distinctive roots (independent moat) to withstand storms. |
| Traditional car vs. Tesla | Adding AI features to old software is like adding a small motor to a gasoline car: it improves, but its essence is unchanged. AI Native products are like Tesla, whose design revolves around batteries and software (the AI core) from the start. Without that core, its unique architecture and features couldn’t exist. |
| Grinding levels in a game | AI entrepreneurship is like playing a high-risk game. Early on, you must quickly “fight small monsters” (serve niche needs) to level up. If you attract the attention of the “Final Boss” (the tech giant) too early, you get insta-killed. Only by leveling up quickly and building “solid fortresses” (community and unique workflow moats) can you win in the end. |

My Reflections

After listening to this conversation, my biggest takeaway is: AI-era entrepreneurship isn’t about competing on technology—it’s about competing on cognition.

Why This Conversation Matters

In the current frenetic atmosphere of “AI entrepreneurship = wrapping GPT,” Chen’s thinking appears exceptionally clear. He didn’t talk about how to quickly raise funding or do viral marketing, but focused on a fundamental question: When you can’t compete with giants on resources, what gives you the right to exist?

The answer to this question isn’t “more advanced algorithms” or “flashier features,” but rather:

- Have you solved real needs others don’t understand or overlook?

- Have you occupied a workflow position that can’t be easily replaced?

- Have you built stickiness that makes it hard for users to leave?

Rethinking “Real Needs”

Chen’s definition of “real needs” made me reflect on many product directions. Are we too easily attracted by surface heat, ignoring those seemingly unsexy but truly persistent needs?

Lovart’s choice to serve “clients” rather than “designers” is very clever positioning. It isn’t making a “better design tool” but solving a completely different problem: how to give people who don’t understand design the ability to communicate at a professional level.

This is truly AI Native—not because it uses AI models, but because AI makes a previously impossible scenario possible.

About “80% of Designers Will Be Replaced”

This judgment seems harsh, but on reflection, earlier technological revolutions followed the same pattern. Photography replaced portrait painters, but photographers became a new profession. Computers replaced human calculators, but programmers became a new profession.

The key isn’t “replacement” but “evolution.” If you’re just an executor, you will indeed be replaced. But if you can provide unique aesthetics, creativity, and judgment, AI will become your most powerful amplifier.

The Power of Belief

The part about “belief” moved me most. Big companies rationally calculate probabilities and choose safe directions with an 80% success rate; what entrepreneurs must do is pursue precisely those things with only a 10% success rate but enormous value if they succeed.

This asymmetry is exactly where entrepreneurial opportunities lie. When no one believes, you execute with 100% belief. By the time it becomes consensus, you’ve already built insurmountable advantages.

Final Advice:

Don’t fear giants. As Chen said, your greatest opportunity lies in the places with “only a 10% success rate.” While big companies are still watching from the sidelines, you’ve already built your kingdom with speed and belief.

This is the entrepreneurial rule of the AI era: in places others don’t see, with speed others can’t match, do things others dare not believe.